DeepCorner: Computation

What does the future of computing look like?

Not to disturb your regular programming, but DeepCorner is back to look into the trends shaping tomorrow's startups.

It’s been a minute (and many new readers) since our last DeepCorner deep dive, so a refresher: earlier this year, we kicked off a new series of deep dives on all things deep tech, brought to you by none other than our writer Kanay Shah.

That first article had a lot of fans! Our second deep dive on American Dynamism was published shortly after. Kanay is back this week following a brief hiatus, diving into the question with the ever-evolving answer: what does the future of computing look like?

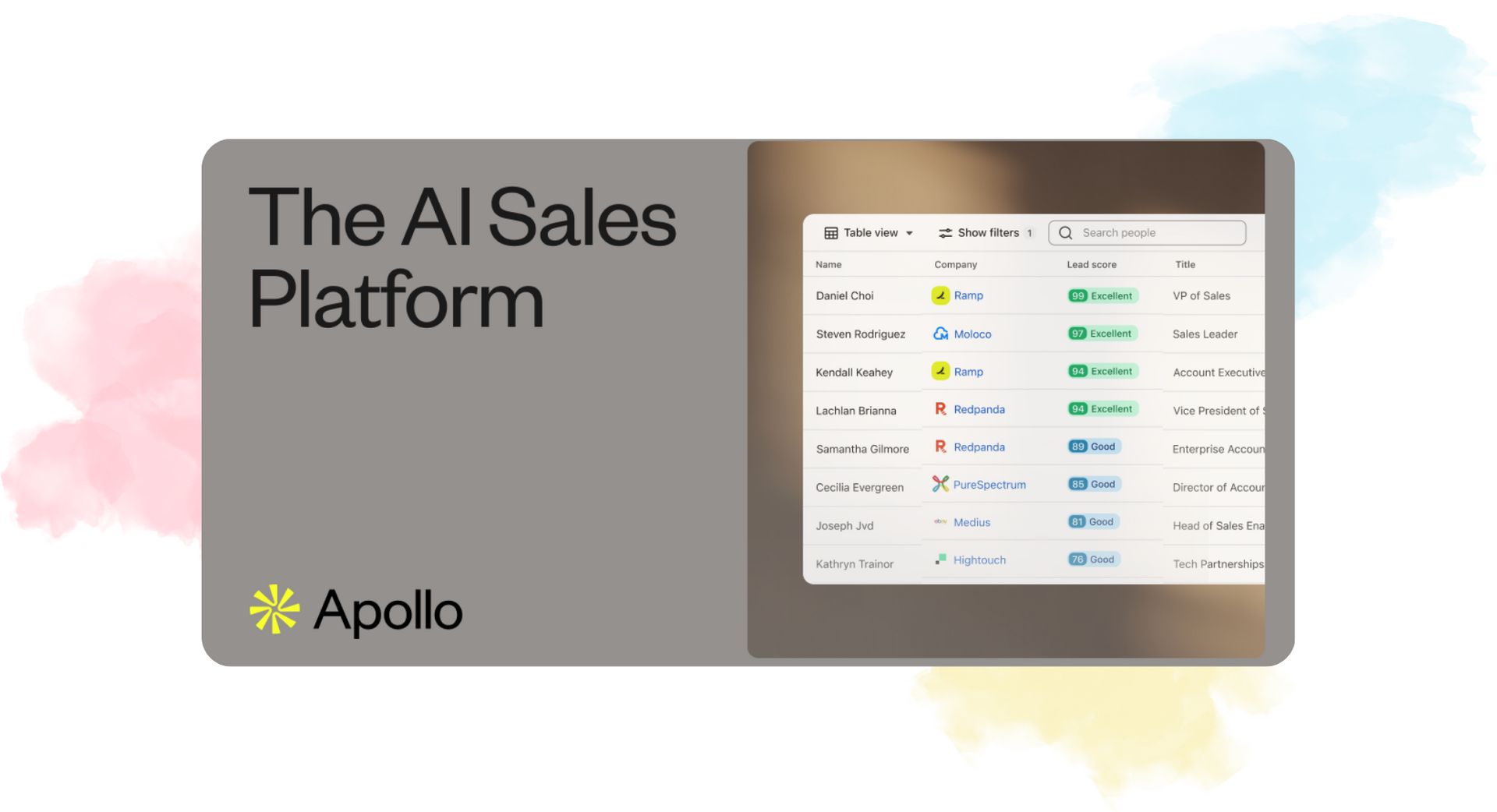

As much talk as there is about AI’s need for computing power, the race to advance computational ability has long been underway.

In the previous edition of DeepCorner, we introduced the growing manufacturing layer, which establishes the groundwork for companies to build on. Just as manufacturing creates a physical foundation, computational developments lay the technical foundation for innovation in all sectors. Today’s hottest industries, including AI, cybersecurity, blockchain, materials science, energy, biotech, aerospace, robotics, and telecommunications, advance as manufacturing capabilities and computational innovation progress, and R&D is only increasing. Whereas manufacturing brings progress into reality, computation is the underlying key to unlocking the future.

Computation goes beyond silicon chips and NVIDIA. Today, we think of computation as silicon and semiconductors; however, the classical silicon computing paradigm is being challenged. New materials, architectures, and principles are emerging to power tomorrow’s innovation.

Today, we’re going to explore the frontiers of compute and what its future looks like.

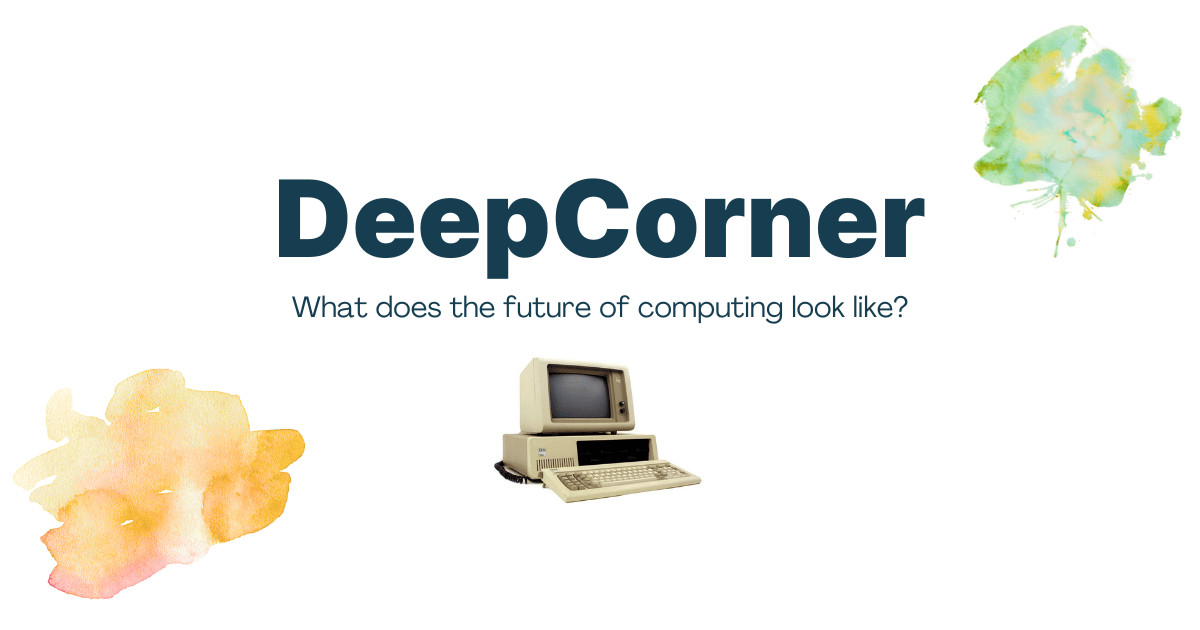

An antique abacus. (Computer History Museum)

Early computation was analog and manual. This is exemplified by tools like the abacus in Mesopotamia, and later mechanical innovations such as the ancient Greek Antikythera mechanism. The first development resembling a modern computer was the analytical engine, imagined by Charles Babbage and Ada Lovelace in the 19th century and based on advancements from figures like Blaise Pascal and Gottfried Wilhelm Leibniz in the 17th and 18th centuries.

The Antikythera mechanism fragments. (Britannica)

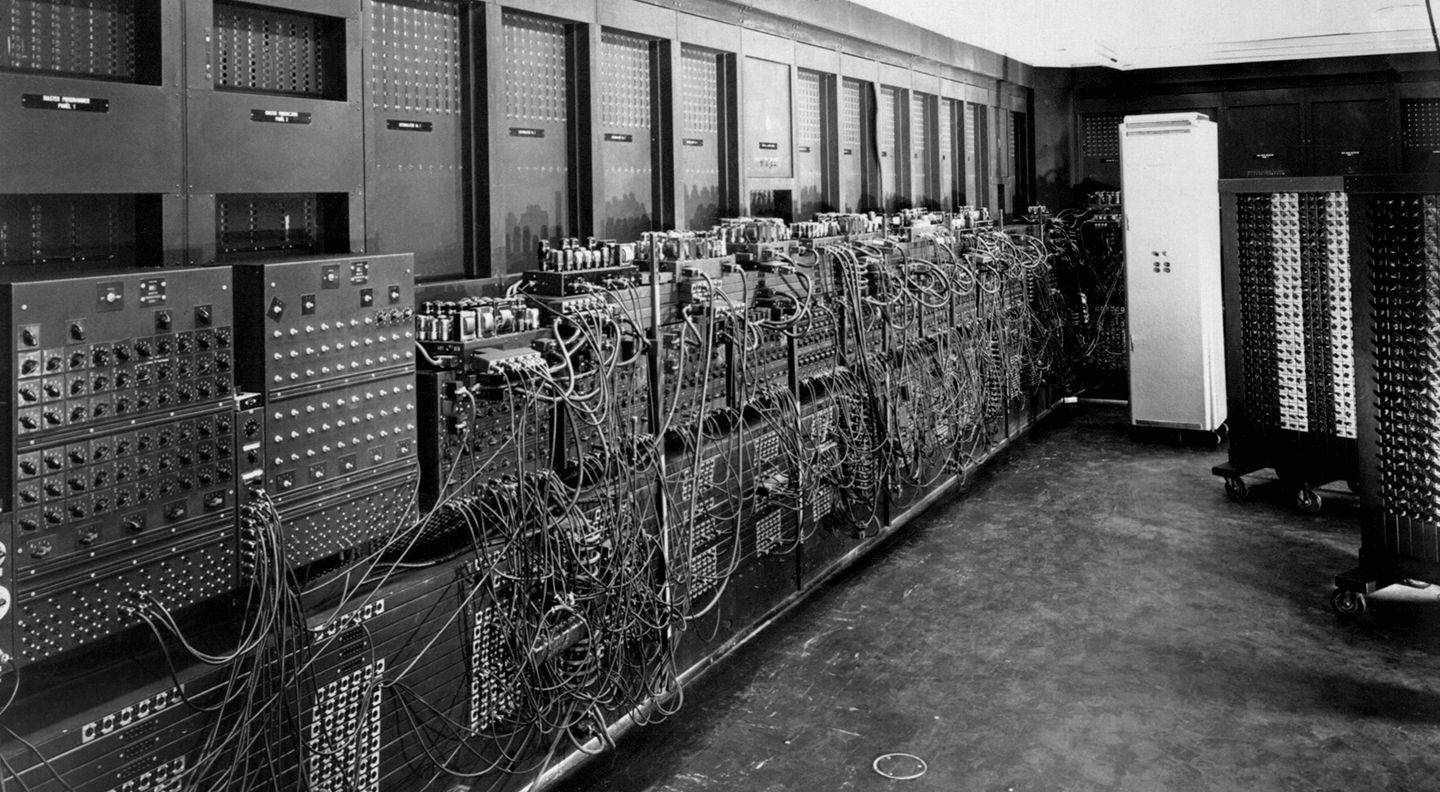

ENIAC, the first computer developed for the U.S. Army. (U.S. Army)

In the 20th century, Alan Turing formalized the concept of computation with the Turing machine, and machines like ENIAC and Colossus made programmable electronic computers a reality. The invention of the transistor in 1947 shrank computers and ushered in the rapid progress that led to what we have today: mainframes, personal computers, and mobile devices.

“What a computer is to me is it’s the most remarkable tool that we’ve ever come up with, and it’s the equivalent of a bicycle for our minds.” (Steve Jobs)

Now, the field has expanded beyond classical computing into quantum, biological, and neuromorphic paradigms, with computation embedded in nearly every aspect of modern life.

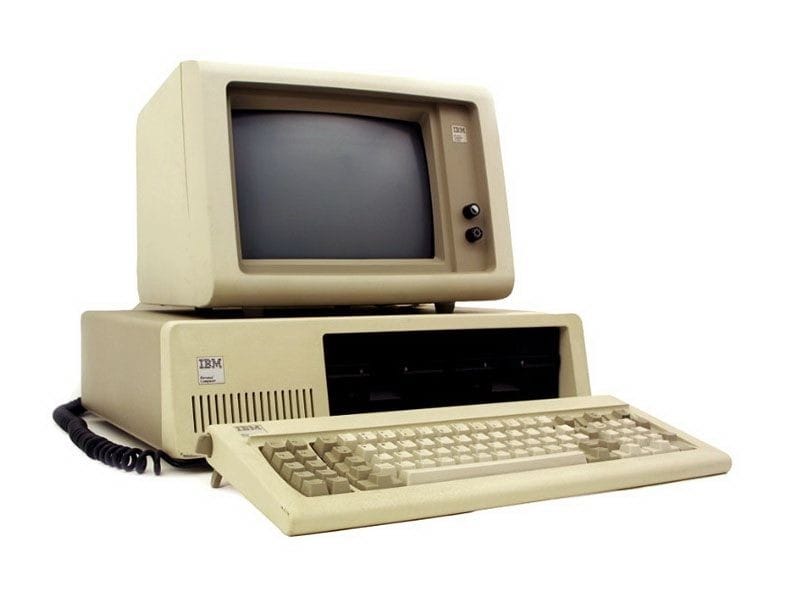

The IBM PC, the first widespread personal computer. (Computer History Museum)

Classical computation is likely what comes to mind when thinking about today’s computers: PCs, smartphones, smartwatches, and even conventional supercomputers all use it. Running on electricity and representing information in binary logic, it is the most common form of computing today. From the smallest devices to supercomputers, these machines are built on the same core architecture: silicon chips.

Cortical Labs CL1 computer, a biological computer. (Tom’s Hardware)

One method of transcending the classical computer utilizes biomolecules like DNA, cells, and neural tissue.

The naturally small scale of these biomolecules gives rise to immense capabilities. By copying how DNA stores vast amounts of data in nucleotide sequences, or by coaxing brain cells to solve problems, these computers aim to apply the evolutionary advantages that nature has given different species to computation. While biological computers apply directly to biological problems, such as drug delivery and therapeutics, the technology has other uses as well, such as AI development, informatics, and cryptography.
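To make the idea of storing data in nucleotides concrete, here is a minimal Python sketch. It assumes a toy mapping of two bits per base (A, C, G, T); the mapping and the function names `encode_to_dna` and `decode_from_dna` are illustrative assumptions, not the scheme used by any company named here. Real DNA storage systems layer on error correction and sequence constraints.

```python
# Toy mapping of 2 bits per nucleotide (an assumption for illustration only;
# real DNA storage schemes add error correction and avoid long repeats).
BITS_TO_BASE = {"00": "A", "01": "C", "10": "G", "11": "T"}
BASE_TO_BITS = {base: bits for bits, base in BITS_TO_BASE.items()}


def encode_to_dna(data: bytes) -> str:
    """Turn bytes into a strand of A/C/G/T, four bases per byte."""
    bits = "".join(f"{byte:08b}" for byte in data)
    return "".join(BITS_TO_BASE[bits[i:i + 2]] for i in range(0, len(bits), 2))


def decode_from_dna(strand: str) -> bytes:
    """Reverse the mapping: read the bases back into the original bytes."""
    bits = "".join(BASE_TO_BITS[base] for base in strand)
    return bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))


if __name__ == "__main__":
    strand = encode_to_dna(b"Hi")
    print(strand)                   # CAGACGGC
    print(decode_from_dna(strand))  # b'Hi'
```

Each byte becomes four bases, so even this naive mapping hints at why DNA is attractive as a dense storage medium.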

Primary players: Ginkgo Bioworks, Twist Bioscience, Cortical Labs, Evonetix

Similar to how biological computation harnesses nature, chemical computation is based on chemical reactions. Aimed primarily at biotechnological and medical applications, these computers can have millions of molecules reacting simultaneously, making them massively parallel and able to solve certain problems faster. Today, however, many systems are single-use because of the reactions that can occur with their environment, and they are highly sensitive to outside variables.

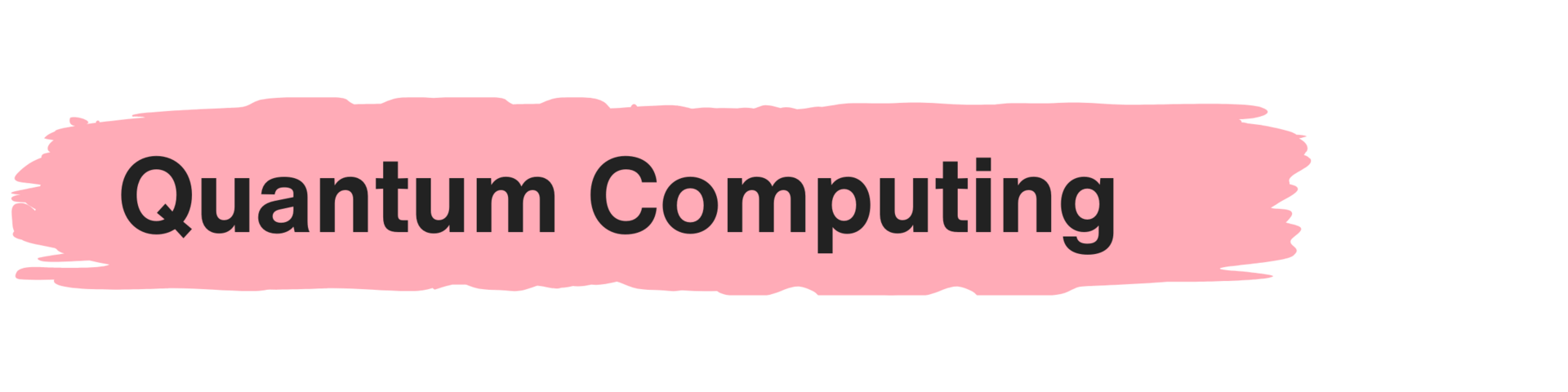

Google’s quantum computer, cooled to nearly absolute zero (about -273 degrees Celsius). (Science)

Quantum computing is the most commonly discussed form of futuristic computation.

Where classical computers use bits, quantum computers use qubits, which can encode multiple states at once. Imagine flipping a coin: in the air, it is both heads and tails until you observe how it lands. This property, known as superposition, allows quantum computers to explore vast numbers of possibilities at once, making them especially powerful for problems that are too complex for traditional machines.
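The coin-flip picture can be sketched in a few lines of Python. This is a classical simulation of a single qubit, under simplifying assumptions: the state is just a pair of amplitudes, and the helper names `hadamard`, `probabilities`, and `measure` are chosen for illustration rather than taken from any quantum SDK.

```python
import math
import random


def hadamard(state):
    """Apply a Hadamard gate to a single-qubit state (amp0, amp1)."""
    a0, a1 = state
    s = 1 / math.sqrt(2)
    return (s * (a0 + a1), s * (a0 - a1))


def probabilities(state):
    """Chance of observing 0 or 1: the squared magnitude of each amplitude."""
    a0, a1 = state
    return (abs(a0) ** 2, abs(a1) ** 2)


def measure(state, rng=random):
    """Observe the qubit: returns 0 or 1 according to the probabilities."""
    p0, _ = probabilities(state)
    return 0 if rng.random() < p0 else 1


if __name__ == "__main__":
    zero = (1.0, 0.0)             # a qubit that is definitely 0
    in_the_air = hadamard(zero)   # now an equal mix of 0 and 1
    print(probabilities(in_the_air))

    counts = [0, 0]
    for _ in range(1000):
        counts[measure(in_the_air)] += 1
    print(counts)                 # roughly half 0s and half 1s
```

Simulating one qubit this way is trivial, but the number of amplitudes doubles with every qubit added, which is why classical machines struggle to imitate large quantum systems.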

Materials science can use quantum computing to simulate candidate materials, while machine learning models can be fine-tuned via intricate quantum algorithms. There are further uses in modelling, such as finance firms developing better predictive models and supply chain companies better forecasting their operations. Physicists can also advance their nuclear, space, and energy research.

Major Companies: IBM, Google, Microsoft, Amazon, Intel, NVIDIA, PsiQuantum, IonQ, Rigetti, Quantinuum, QuEra, Pasqal, Quantum Machines

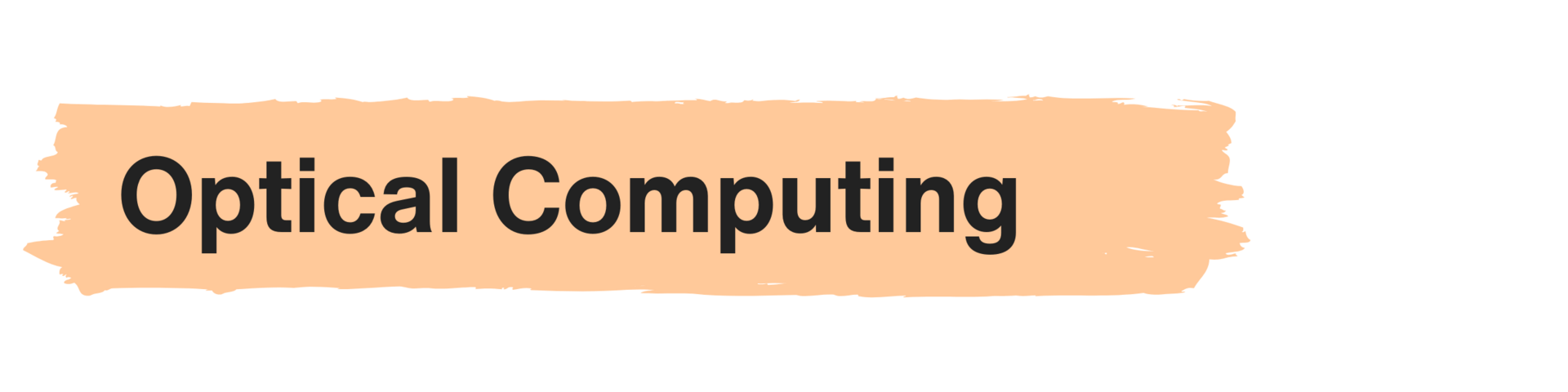

Lightmatter’s photonic PCI-E card. (Lightmatter)

Optical, or photonic, computers promise efficient computation by exploiting the benefits of light, namely high speed and low heat generation. While the technology is far from mainstream adoption given how early it is in development, there are opportunities in AI and machine learning to use optical computers to accelerate model training and inference (the familiar step of actually getting results from the model). Some of the most prevalent applications today, however, include high-speed communication, better signal and pattern recognition, and reduced energy consumption in high-performance computing.

Primary players: Intel, IBM, NVIDIA, Lightmatter, Lightelligence, Ayar Labs, Optalysys, PsiQuantum

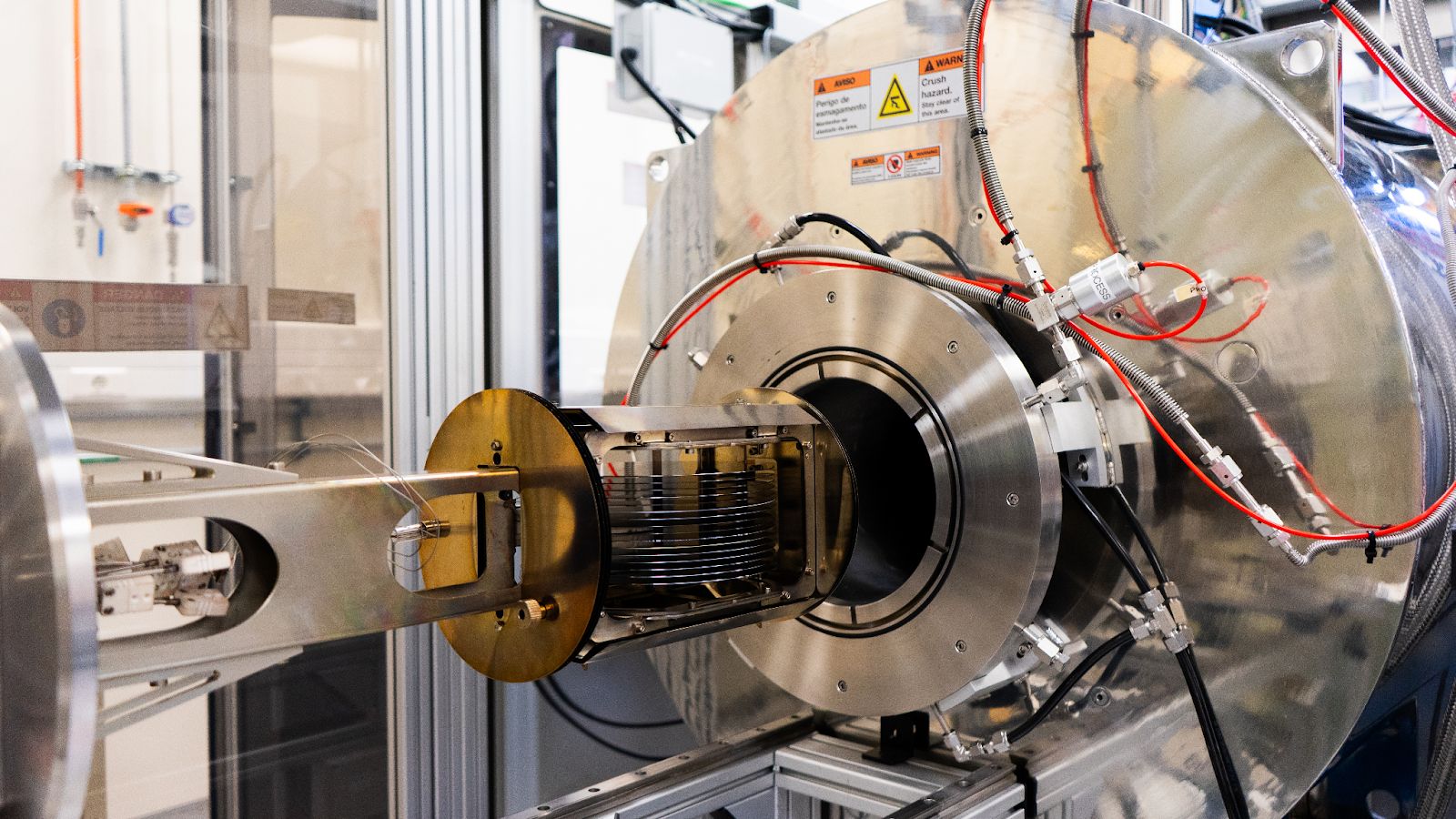

(International Iberian Nanotechnology Laboratory)

Spintronic computation uses the spin of electrons, representing the 0s and 1s of binary logic as spin-up or spin-down. Theoretical proposals would add further states, such as angular spin or charge. If realized, this could enable capabilities similar to quantum computing, though more limited. The primary benefit of spintronic computation is that, unlike conventional charge-based memory, spintronic memory retains data without power. This makes it more energy- and time-efficient than classical computers. Since all applications rely on storage and memory, companies can achieve more efficient storage, as well as additional security.

Primary players: Samsung, IBM, Western Digital, Everspin, Avalanche Technology

AKAT-1, an analog computer and the first transistorized differential equations analyzer. (Britannica)

Throwing out the standard paradigm of 0s and 1s, analog computers use continuous signals that can take any value in a given range. These computers were originally used for general simulations like flight paths and weather forecasting, but are making a comeback for low-power AI and edge computing applications. Outside of specialized use cases like these and computing differential equations, analog computers offer little benefit over the classical computing paradigm.

Primary players: Texas Instruments, Analog Devices, Intel, IBM, STMicroelectronics, Mythic, GrAI Matter Labs, Aspinity, Analog Inference, BrainChip

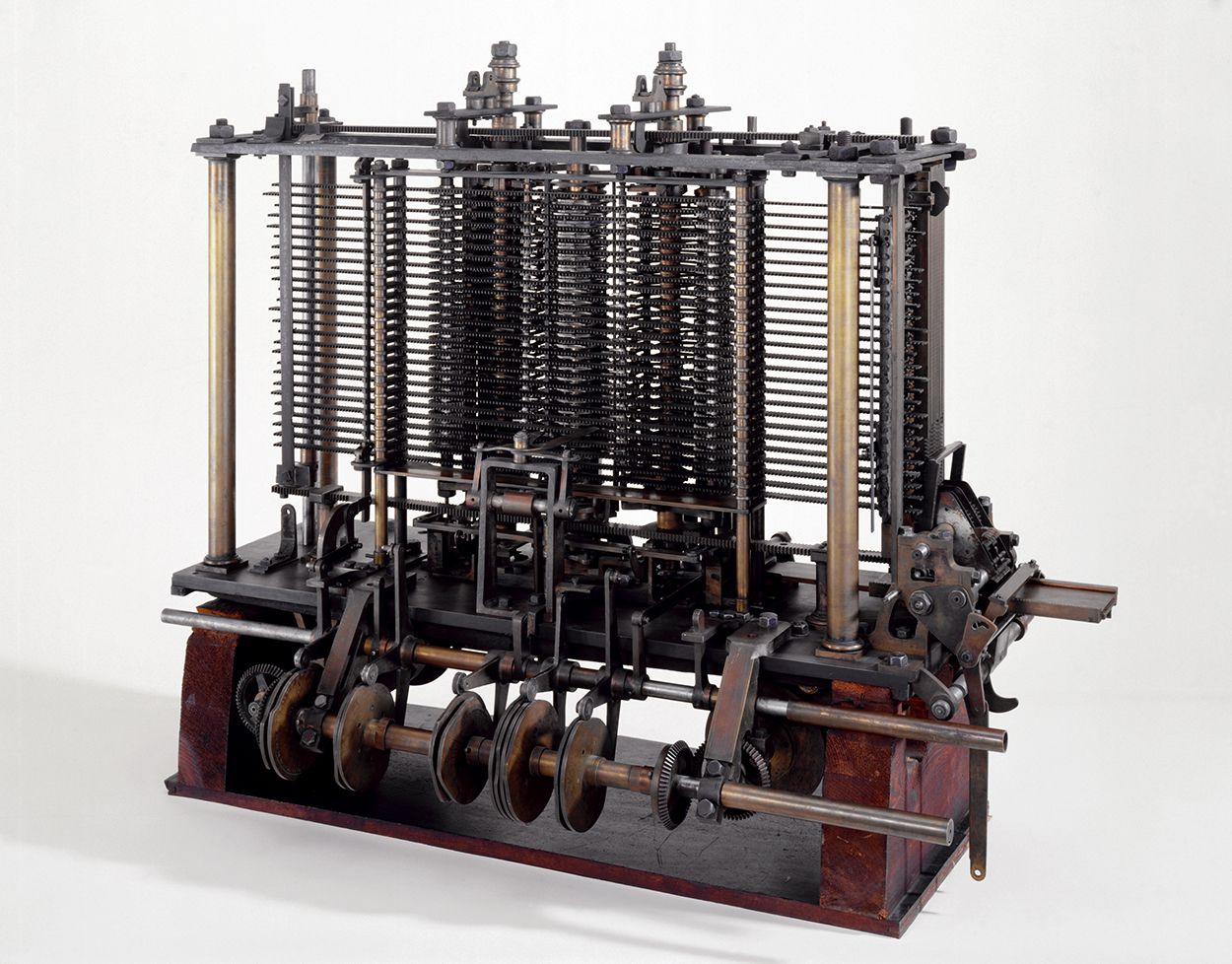

The analytical engine. (Science Museum)

Mechanical computation, which relies strictly on moving components such as gears, levers, and wheels, is the oldest form of computation, dating back centuries before electricity. Although it is obsolete for most modern purposes, there are niche applications where it is still in use. For example, it is not susceptible to radiation effects, making it great for nuclear and space purposes.

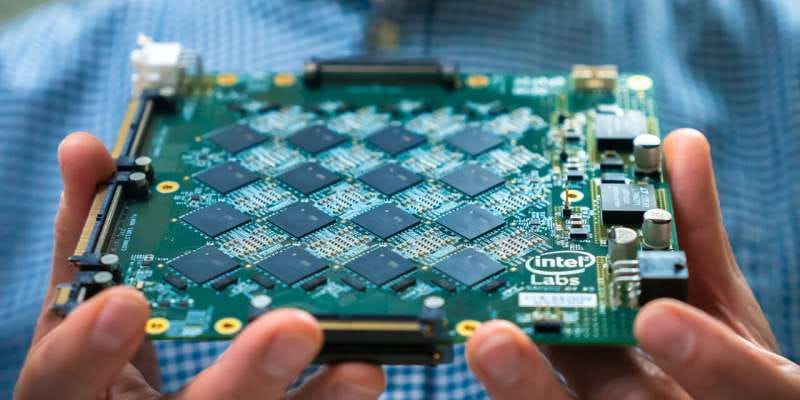

Intel’s Nahuku board, built from its Loihi neuromorphic chips. (Intel)

Neuromorphic computation is still silicon-based, but is designed to mimic our brains. It works with spikes and pulses akin to brain signals rather than conventional binary logic. Its primary benefit is that it is event-driven, making it capable of learning on the fly, recognizing patterns, and making decisions like humans. This gives it strong potential for use with AI. There are other use cases as well, such as brain-computer interfaces that convert brain waves into tangible actions, and robotics that imitates human movement.
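The spike-driven behavior described above can be illustrated with a leaky integrate-and-fire neuron, a standard textbook model. This is a simplified Python sketch, not how Loihi or any particular chip is implemented; the function name `simulate_lif` and the parameter values are illustrative assumptions.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate one leaky integrate-and-fire neuron.

    At each step the membrane potential decays by `leak`, adds the incoming
    current, and emits a spike (1) when it reaches `threshold`, after which
    it returns to `reset`. All parameter values are illustrative.
    """
    potential = reset
    spikes = []
    for current in input_current:
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)
            potential = reset
        else:
            spikes.append(0)
    return spikes


if __name__ == "__main__":
    # Three small inputs accumulate until the neuron fires once, then it rests.
    print(simulate_lif([0.5, 0.5, 0.5, 0.0, 0.0]))  # [0, 0, 1, 0, 0]
```

The neuron emits a spike only when its input pushes it over the threshold and stays silent otherwise, which is the intuition behind the event-driven efficiency of neuromorphic hardware.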

Beyond these individual systems, hybrid models are emerging that combine the strengths of multiple paradigms. The most prevalent today are quantum-classical hybrids, where quantum processors tackle complex subproblems while classical systems handle orchestration and integration. Other combinations like analog-digital AI chips or neuromorphic-sensor stacks reflect a trend toward blending specialties rather than chasing one-size-fits-all solutions. Digital systems remain the foundation, but the future is likely to be modular, borrowing the best from many systems.

Some of the biggest winners in any industry are the base layer companies that provide the tools for other companies to build on top of. Take, for example, a consumer application like an AI chatbot. The frontend layer is hosted on a cloud service, such as AWS or Microsoft Azure. AWS and Azure rely on chip designers such as NVIDIA, AMD, and Qualcomm to power their systems. Going one layer deeper, TSMC and Intel operate the fabs where the chips are fabricated. And at the foundational level, ASML builds the equipment that TSMC and Intel use in their fabs. Computational innovations will disrupt this stack at all levels.

AI is already facing bottlenecks. Model training is becoming ever more resource-intensive, and in the race to develop the best models, companies are scrambling to ink deals that provide more resources, from servers to water for cooling to energy. Other forms of computation will change the game, and those who develop the next generation of computers will have a tremendous edge in AI.

Beyond AI, however, there are other emerging applications. The biological and chemical problems we face as a society are naturally suited to biological and chemical computers. Disease has come to the forefront of the public consciousness following the pandemic, and with increasing attention on health, startups are pouring research and development dollars into treatments and cures. An increasingly volatile geopolitical world is also pushing countries to find better methods of security and defense. With the U.S. government investing in quantum computers, there is speculation about the true potential of those machines. Even more experimental computers are showing promise, but have a long way to go before viability.

Looking past the classic B2B SaaS model, the startups redefining the future with DeepTech will be powered by the new wave of computers. Many rounds are being raised, like PsiQuantum’s $750 million round, Celestial AI’s $250 million round, and Quantum Machines’ $170 million Series C, all of which closed this year. With many other companies closing rounds, the money shows where investors see the future. Major generalist investors in the space include BlackRock, Founders Fund, Redpoint Ventures, Andreessen Horowitz, Khosla Ventures, and others. Corporate funds are deploying serious capital as well, such as M12 (Microsoft), Intel Capital, Samsung Catalyst Fund, NVIDIA’s NVentures, BASF Venture Capital, and Siemens Venture Capital. In addition, dedicated firms, such as Qubits Ventures, Quantonation, Playground Global, Cottonwood Technology Fund, and OS Fund, are joining cap tables. While this is not an exhaustive list, both generalist firms and specialist funds are raising and deploying capital into the computation layer.

As mentioned in previous articles, DeepTech is a booming sector, with more companies being founded and more dollars going towards those companies. We already see more mainstream companies such as IBM, Microsoft, and Google developing their own non-classical computers, with the intent to use them for commercial purposes. Other startups have started to build non-traditional computing into products such as brain-computer interfaces and therapeutics.

Now that we have covered the foundation of DeepTech, we look forward to specific sector deep dives, special company features, and interviews with some of the best builders. As always in startups, there is a lot happening, so look to DeepCorner to distill the noise into a clear picture.

SaaS is out, science is in.
